The discourse surrounding artificial intelligence is often framed as an unstoppable force of nature, like a technological tsunami set to either obliterate or upgrade society.

Projections from firms like Goldman Sachs forecast that generative AI could lift global GDP by 7% over a decade, driven by immense productivity gains. Simultaneously, warnings from trade unions and technologists alike paint a grim picture of mass job displacement and the erosion of humanness.

This binary of utopia versus dystopia, however, is far too simple a narrative. It obscures a more fundamental truth.

The future is not something that happens to us, but something we build.

The impact of AI will not be determined by the technology itself, but by the economic logics and human values we embed within it. And we are at a fork in the road.

Down one path lies a system optimised for pure efficiency and shareholder value. Essentially a continuation of the last forty years of economic thinking, this path is now armed with an unprecedented tool for automation.

Down the other lies an economy geared towards augmentation and purpose: one that uses AI to solve human problems and unlock new forms of creativity and enterprise. To understand the choice we face, I believe we must analyse these two potential futures as competing business models and social structures being built in real time.

Future A - the efficiency engine

This future represents the path of least resistance. It’s the logical endpoint for a business culture fixated on maximising short-term profits.

In this model, AI is primarily a tool for cost reduction. The prediction that 50% of entry-level office roles could be automated is not a problem to be solved, but an opportunity to be seized. The goal is to strip processes down to their most efficient, predictable, and inexpensive components.

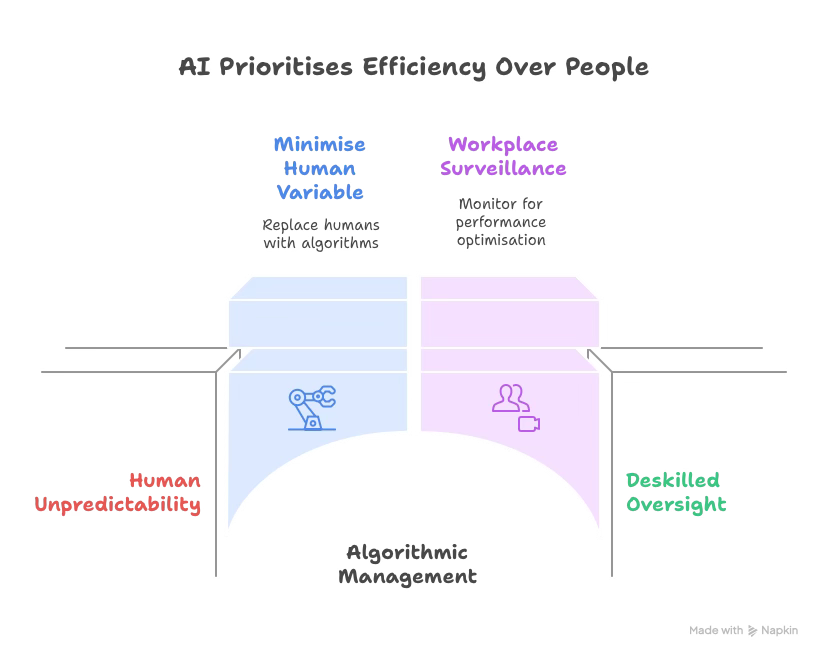

Here, the concept of Human Capital Risk Management takes on a cynical tone. As outlined by platforms like FasterCapital, this practice involves mitigating people-related risks, but in the Efficiency Engine, the greatest risk is human unpredictability itself.

The solution is to minimise this variable. Humans are managed, monitored, and increasingly replaced by algorithmic systems. The European Trade Union Confederation (ETUC) warns that this approach "threatens the value of human labour" and "widens inequalities".

It’s a future where AI, trained on biased historical data, can reinforce societal prejudices in hiring, promotion, and dismissals, all under a veneer of objective, data-driven decision-making.

Workplace surveillance becomes normalised as a necessary tool for performance optimisation. This raises profound privacy concerns, echoing the ETUC’s calls for workers to have control over their data.

In short, this future automates management itself. It creates a world of deskilled oversight, where the primary human function is to validate the machine’s output, leading to an erosion of deep expertise and professional autonomy.

Future B - the purpose economy

The alternative path requires a more imaginative economic logic, built on the counter-intuitive premise articulated by innovators like Jonathan Ross of Groq: that truly powerful AI will not cause mass unemployment, but "labour shortages".

This theory rests on a virtuous cycle: speed and accessibility in AI lead to better, faster answers, which in turn create explosive demand for new products, new services, and new ideas that were previously inconceivable.

In this future, AI is a catalyst for augmentation. When a customer service agent’s productivity is boosted by 14%, as one study found, the goal isn't to cut headcount by 14%. It’s to free up that agent’s time to solve more complex, high-value problems that build customer loyalty.

Or think of a graphic designer who uses AI tools to explore thousands of visual concepts in minutes, freeing them to focus on the strategic and emotional core of a brand's identity. This is a strategic shift from managing costs to expanding capabilities and unlocking new frontiers of human creativity.

This model depends on democratising access to the tools of creation. Ross’s mission to drive the cost of compute towards zero, making it a utility like electricity, is central to this vision. When anyone with an idea can access powerful AI, it "unlocks billions of people", enabling them to build businesses that "weren't even profitable before".

It fosters a resilient, decentralised economy of specialists, creators, and community builders. This path also requires entirely new roles, created not as an afterthought but as a core function of a healthy ecosystem.

We will need thousands of Model-Bias Auditors to ensure fairness, skilled Prompt Engineers with deep communication expertise, and AI ethicists to guide development. This is a future that invests in human skills, particularly those resistant to automation: critical thinking, emotional intelligence, and complex problem-solving.

It’s a system designed not just for efficiency, but for innovation, resilience, and human flourishing.

It isn’t the tech; it's the rules

The path we take will ultimately be determined by the systems of power and accountability we build around the technology. As tech journalists like Karen Hao have extensively documented, the current AI industry is a highly concentrated "Empire" dominated by a few US and Chinese tech giants. This concentration of power naturally favours the Efficiency Engine model.

To steer towards the Purpose Economy, a robust governance framework is non-negotiable. The ETUC’s call for a "Directive on Algorithmic Systems in the Workplace" to enforce a human-in-control principle is a vital first step.

In this context, the European Commission’s recent withdrawal of its proposed AI Liability Directive is a significant setback. Without clear liability, who pays the price when an AI-driven medical diagnosis is wrong, or when a recruitment algorithm illegally discriminates? The risk falls on the individual while the profit remains with the corporation.

Furthermore, we must look at the entire global value chain. The gleaming, minimalist interfaces of the AI tools we use are often built on the maximalist, repetitive, and poorly compensated labour of thousands of people, from data labellers to content moderators. This forces us to ask a difficult question:

Are we comfortable with a future built on a foundation of digital-era exploitation?

And then there is the fundamental issue of sustainability. Training large language models (LLMs) consumes vast amounts of energy and water. This is an environmental constraint that cannot be ignored. The solution lies in both policy, such as mandatory reporting on AI's environmental footprint, and innovation, such as the development of more energy-efficient hardware.

The future of AI is inseparable from the future of our planet.

How to read the map

I’m certain that understanding this fork in the road is the first step towards making a conscious choice. As citizens, employees, and leaders, we must become adept at analysing the AI systems being deployed around us. The following questions move beyond a simple good-or-bad binary and offer a framework for that analysis:

On power and profit: Who funded this technology? Who owns the data? Who profits from its deployment? Is the value created by efficiency gains being reinvested in the workforce and innovation, or is it being extracted for shareholders?

On people and purpose: Does this tool augment human capability or replace it? Does it create new skills or deskill its user? Does it increase worker autonomy, or does it enable greater surveillance and control?

On accountability and governance: Who is liable when this system fails or causes harm? Are the decisions it makes transparent and contestable? Does its development consider the entire supply chain, from data labelling to energy consumption?

The narrative that AI is an uncontrollable force serves those who benefit from a lack of oversight. The truth is that we are all architects of the coming age of AI.

By demanding answers to these questions, we can begin to steer our collective future down the path of purpose. I'm curious to know what new possibilities you see on the horizon.

This series, "The Soul of the New Machine", is my personal exploration of how we can preserve our humanity in an age increasingly governed by code.

Hi, I'm Miriam - an AI ethics writer, analyst, and strategic SEO consultant.

I break down the big topics in AI ethics, so we can all understand what's at stake. And as a consultant, I help businesses build resilient, human-first SEO strategies for the age of AI.

If you enjoy these articles, the best way to support my work is with a free or paid subscription. It keeps this publication independent and ad-free, and your support means the world. 🖤