The Automation of You (How AI and Algorithms Erode Critical Thought)

Our daily pursuit of a frictionless life is quietly costing us our ability to think, decide, and connect with the world.

The other week, I was driving my six-year-old to a birthday party in a part of town I barely knew. Without a moment’s thought, I tapped the address into my phone, and a calm, disembodied voice began dictating the route. Turn left here. Merge onto the A road. At the junction, take the first exit. I obeyed without question.

It was only when I arrived, perfectly on time, that a strange feeling crept over me. I had absolutely no memory of the journey itself; I couldn’t have retraced my steps if I had tried. I hadn’t been navigating; I had simply been following orders.

It was a small thing, a mundane moment in a busy week. But it stayed with me. It felt like a tiny glimpse into a much larger trade we are all making, every single day. We are handing over the cognitive labour of making choices, big and small, to algorithms. And I have to ask myself:

Are we sleepwalking into a world where we no longer know how to decide for ourselves?

The allure of cognitive offloading

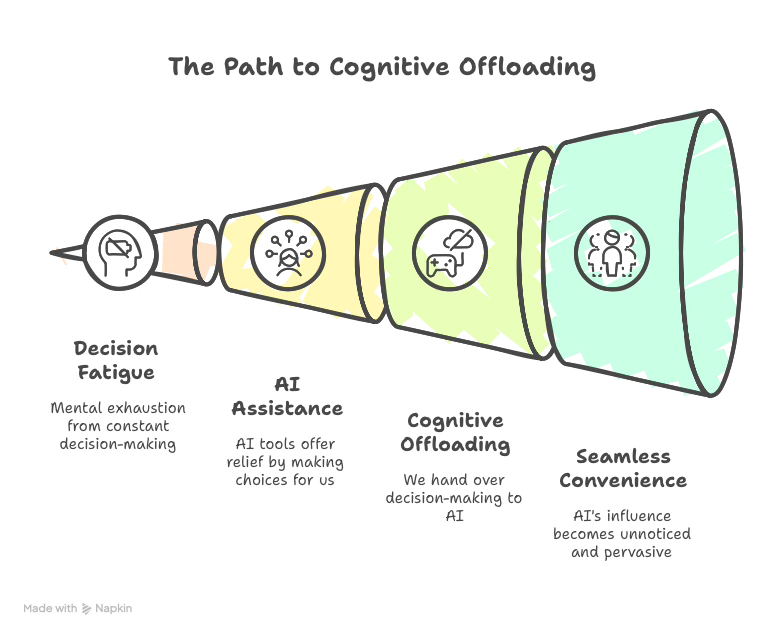

Let’s be honest: decisions are hard. They are mentally taxing, and the sheer volume of choices we face in a typical day can lead to what psychologists call decision fatigue.

From deciding on a marketing strategy at work to choosing what to make for dinner, it all consumes finite mental energy. So when a streaming service saves us from an evening of scrolling by serving up the perfect show, or an online shop curates a list of things it knows we’ll love, it feels less like a surrender and more like a relief.

This is the seductive bargain of artificial intelligence. We engage in a kind of cognitive offloading: we hand over a piece of our thinking to a machine to free up our own mental bandwidth. And as a busy parent and business owner, I’ll be the first to admit that this can feel like a superpower.

The ecosystem of these decision-makers is vast; research shows that while only a third of people think they use AI, over three-quarters of us use an AI-powered service every single day. It’s the smart reply function suggesting how to answer an email, the navigation app finding the fastest route, and the spam filter deciding which messages are even worthy of our attention.

The convenience is so seamless that we rarely stop to consider the cumulative effect. The algorithm that picks your music often belongs to one of the same handful of companies whose other algorithms fill your news feed, suggest who you should date, and even filter the job applications for your next career move.

Each outsourced decision is a single, seemingly insignificant thread, but woven together they create a fabric that quietly directs the course of our lives.

Where is the line between a helpful shortcut and a path we follow blindly?

The cognitive toll of convenience

The relationship we’re building with these algorithmic assistants is not cognitively neutral. While the benefits are immediate, a growing body of research indicates that this convenience comes at a significant cost. In our quest for frictionless living, we may be systematically deskilling ourselves in the core competencies that we consider fundamentally human.

The best-documented cost is the erosion of critical thinking. Studies have found a clear negative correlation between frequent AI tool use and performance on critical thinking tests. By delegating the heavy lifting of problem-solving, research, analysis, and evaluation, we bypass the effortful cognitive processes that build and maintain those intellectual muscles.

It’s particularly concerning for young people, who are growing up in an environment where an AI-generated answer is always available. The danger is that we are fostering an illusion of understanding: a sense of knowledge without the cognitive work required to earn it.

This extends beyond how we think to what we think about. The personalised news feeds on social media are designed to maximise our engagement by showing us content we are likely to agree with. This creates what are known as filter bubbles, which amplify our own biases and reduce our ability to engage with different perspectives.

Our emotional lives are also being engineered. Algorithms have learned that emotionally charged content, such as posts that generate outrage or anxiety, is highly effective at holding our attention. This can lead to emotional dysregulation, in which we become habituated to heightened reactivity driven by a platform’s needs rather than our own.

Our ability to focus, to remember, and even to learn social norms is being subtly altered by this new environment. It’s a bit like the difference between using a tool and living in an ecosystem. A tool is passive; you pick it up and put it down.

An ecosystem, however, constantly and subtly shapes the organisms within it. And the digital ecosystem we now inhabit is optimised not for our well-being, but for platform-defined metrics like engagement.

What does it mean for our humanity when our very thought processes are being reshaped to better suit the needs of a machine?

The rise of algorithmic authority

This isn't just about convenience; it’s about a new kind of power. It's what academics call algorithmic authority: the power of algorithms to manage human action and govern our lives, often invisibly. This authority is most potent when it operates from within a "black box".

Having spent over a decade in SEO, I’m intimately familiar with the concept of a black box. It’s an opaque system where you can see the inputs and the outputs, but you cannot see the internal logic. You can’t ask a search engine why it ranked one page higher than another. Now, imagine that same lack of transparency applied to life-altering decisions.

This is happening right now. In the gig economy, algorithmic management systems assign tasks, set pay rates, and can even fire workers by deactivating their accounts, often with no human manager involved. But the stakes become even higher when this unaccountable authority makes judgments about our character, our opportunities, and our freedom.

The theoretical concerns become tangible when we look at the evidence. Here are just a few documented cases:

In hiring, Amazon had to scrap an AI recruiting tool after discovering it had taught itself that male candidates were preferable. The system, trained on a decade of historically male-dominated data, systematically penalised CVs that included the word "women's" and downgraded graduates from two all-women's colleges. It automated the company's past biases.

In finance, a UC Berkeley study found that fintech lending algorithms charged African American and Latinx borrowers significantly higher interest rates than their white counterparts with similar credit profiles.

This practice of "digital redlining" costs minority communities an estimated $450 million (£330 million) in extra interest every year, directly exacerbating the racial wealth gap. The algorithms use proxies like your postcode or even your phone's operating system to make discriminatory predictions.

In criminal justice, the COMPAS algorithm, a risk-assessment tool used in US courtrooms, was found by a ProPublica investigation to be deeply biased. It falsely flagged Black defendants as being at high risk of reoffending at nearly twice the rate of white defendants.

These scores, presented with a veneer of scientific objectivity, carry immense weight in sentencing and bail decisions.
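The ProPublica finding is about false positive rates: among defendants who did not go on to reoffend, what share were nonetheless labelled high risk? A minimal sketch of that arithmetic follows. The group labels and counts are entirely made up to show the calculation; they are not ProPublica's data.

```python
from dataclasses import dataclass


@dataclass
class NonReoffenders:
    """Hypothetical counts for one group of defendants who did NOT reoffend."""
    flagged_high_risk: int  # wrongly labelled high risk (false positives)
    flagged_low_risk: int   # correctly labelled low risk (true negatives)


def false_positive_rate(group: NonReoffenders) -> float:
    """Share of non-reoffenders who were wrongly flagged as high risk."""
    total = group.flagged_high_risk + group.flagged_low_risk
    return group.flagged_high_risk / total


# Illustrative numbers only, chosen to show a two-to-one disparity.
group_a = NonReoffenders(flagged_high_risk=40, flagged_low_risk=60)
group_b = NonReoffenders(flagged_high_risk=20, flagged_low_risk=80)

fpr_a = false_positive_rate(group_a)
fpr_b = false_positive_rate(group_b)
print(f"Group A FPR: {fpr_a:.0%}, Group B FPR: {fpr_b:.0%}, ratio: {fpr_a / fpr_b:.1f}x")
# prints "Group A FPR: 40%, Group B FPR: 20%, ratio: 2.0x"
```

The point the sketch makes is that a tool can look reasonably accurate overall and still distribute its mistakes very unequally between groups, which is the kind of disparity the investigation highlighted.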

In healthcare, a risk prediction algorithm used by US hospitals to identify patients needing extra care was found to be racially biased. It used a patient's past healthcare costs as a proxy for their level of illness.

Because less money is historically spent on Black patients due to systemic inequities, the algorithm systematically underestimated their health needs, recommending healthier white patients for extra care ahead of sicker Black patients.
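The mechanism is easy to reproduce in miniature. In the sketch below, every patient is hypothetical: each has a true level of illness, but the model only sees past spending, and group "B" is assumed to have had less spent on it at the same level of illness. The 0.6 spending factor and the patient records are illustrative assumptions, not figures from the study.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    group: str
    illness: float  # true health need, hidden from the model


# Hypothetical assumption: group "B" historically receives 60% of the
# spending that group "A" receives for the same level of illness.
SPEND_FACTOR = {"A": 1.0, "B": 0.6}


def past_cost(p: Patient) -> float:
    """The proxy the model actually sees: money spent, not sickness."""
    return 1000 * p.illness * SPEND_FACTOR[p.group]


def select_for_extra_care(patients: list[Patient], k: int, key) -> list[str]:
    """Return the names of the k patients ranked highest by `key`."""
    ranked = sorted(patients, key=key, reverse=True)
    return [p.name for p in ranked[:k]]


patients = [
    Patient("a1", "A", illness=6.0),
    Patient("a2", "A", illness=5.0),
    Patient("b1", "B", illness=8.0),
    Patient("b2", "B", illness=7.0),
]

by_cost = select_for_extra_care(patients, k=2, key=past_cost)
by_need = select_for_extra_care(patients, k=2, key=lambda p: p.illness)
print("Selected by cost proxy:", by_cost)  # ['a1', 'a2']
print("Selected by true need: ", by_need)  # ['b1', 'b2']
```

Nothing in the code mentions race or group membership when ranking; the bias enters entirely through the choice of proxy, which is why such systems can look neutral while reproducing historical inequity.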

What these cases reveal is that the AI system is often not "broken"; it’s working perfectly by learning and amplifying the inequalities present in our society.

The output of one biased system becomes the input for the next, creating a digital prison of intersecting disadvantages.

Reclaiming our agency

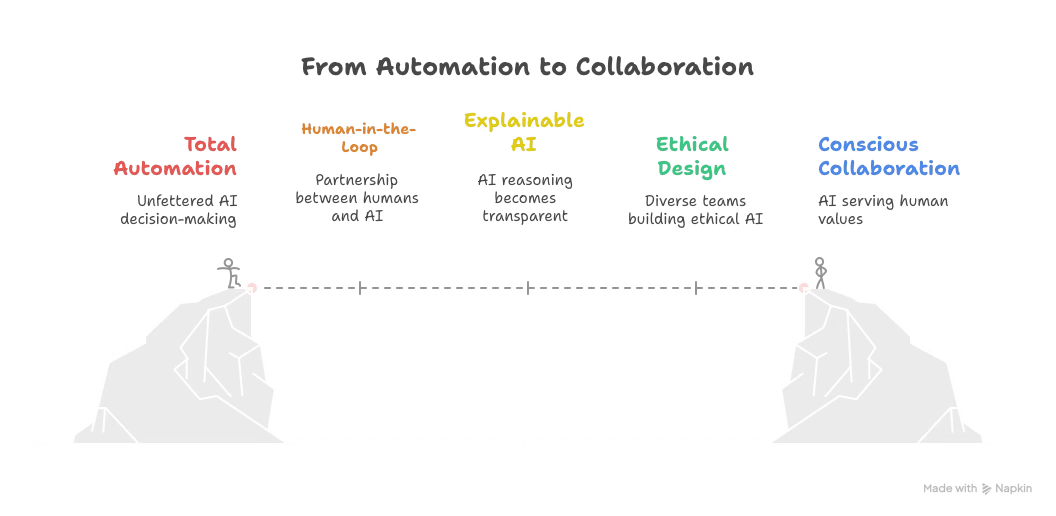

In the face of this, our challenge is not to halt technology but to steer it in a direction that serves human values. This requires a fundamental shift away from a paradigm of total automation towards one of conscious collaboration.

One of the most effective safeguards is to insist on a Human-in-the-Loop (HITL) approach. This is a design philosophy where humans and AI work in partnership. AI excels at processing vast datasets with speed and consistency; humans excel at understanding context, exercising ethical judgement, and adapting to novel situations.

A well-designed HITL system uses AI to augment human intelligence, not replace it. It flags low-confidence or ethically sensitive decisions for human review, ensuring that a person with the expertise and authority to intervene makes the final call. This principle, often described as Meaningful Human Control, underpins the human oversight requirements in regulations like the EU's AI Act.
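In practice, the routing step can be as simple as a confidence threshold plus a list of sensitive categories. Here is a minimal sketch; the `Decision` fields, the 0.9 threshold, and the category names are hypothetical choices for illustration, not taken from any particular product or from the AI Act itself.

```python
from dataclasses import dataclass

# Hypothetical settings: a real system would tune these per application.
CONFIDENCE_THRESHOLD = 0.9
SENSITIVE_CATEGORIES = {"hiring", "credit", "sentencing", "healthcare"}


@dataclass
class Decision:
    case_id: str
    category: str
    recommendation: str
    confidence: float  # the model's own confidence score, 0.0 to 1.0


def route(decision: Decision) -> str:
    """Decide whether the AI's recommendation can stand or needs a human."""
    if decision.category in SENSITIVE_CATEGORIES:
        return "human_review"  # ethically sensitive: a person makes the final call
    if decision.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # the model is unsure: escalate
    return "automated"         # routine and high-confidence: let it through


for d in [
    Decision("001", "spam_filter", "move to junk", 0.97),
    Decision("002", "spam_filter", "move to junk", 0.62),
    Decision("003", "credit", "decline loan", 0.99),
]:
    print(d.case_id, route(d))
# 001 automated
# 002 human_review
# 003 human_review
```

The design choice worth noticing is that sensitivity overrides confidence: a loan decision goes to a person even when the model is 99% sure, because a confident answer is not necessarily a fair one.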

Alongside this, we need to push for Explainable AI (XAI). This is the technical quest to open the black box and make an AI's reasoning transparent. If we can understand why an AI made a recommendation, we can challenge its fairness and correctness. XAI is not a perfect solution, however: there can be a trade-off between a model's performance and its transparency.

Ultimately, this is a societal challenge. It requires robust policy and a commitment to ethical design. It means ensuring the teams building AI are diverse and inclusive, to avoid the "white guy problem" that bakes a narrow worldview into our technology.

And it requires us, as individuals, to resist the slide into passive consumption. We must cultivate an awareness of how these systems are designed to influence us and make deliberate choices about when to embrace their convenience and when to insist on exercising our own judgement.

The journey from a Spotify playlist to a biased sentencing tool is shorter and more direct than we might imagine. The path is paved with a series of small, seemingly rational trade-offs. Looking at the evidence, from the subtle shaping of your thoughts to the life-altering judgments made in your name, where do you draw the line?

So, I’ll leave you with a question, a small piece of personal research.

What's one decision you've recently outsourced to an algorithm? How did it feel? Share your story in the comments.

This series, "The Soul of the New Machine", is my personal exploration of how we can preserve our humanity in an age increasingly governed by code.

Hi, I'm Miriam, an AI ethics writer, analyst, and strategic SEO consultant.

I break down the big topics in AI ethics, so we can all understand what's at stake. And as a consultant, I help businesses build resilient, human-first SEO strategies for the age of AI.

If you enjoy these articles, the best way to support my work is with a free or paid subscription. It keeps this publication independent and ad-free, and your support means the world. 🖤