Elicit and the Frontier of AI-Assisted Research

In a world of AI hype, can one company's bet on transparency and human oversight change the future of research?

How do we find true insight in a flood of academic papers without compromising the standards that give research its authority? Enter Elicit, an AI research assistant that’s making bold claims about transforming the research process.

Unlike the flashy consumer AI tools dominating headlines, Elicit has positioned itself as something different:

Its stated mission isn't just to make research faster; it's to "scale up good reasoning."

This ambitious goal brings us to a critical crossroads. What happens when we delegate not just the grunt work of research, but potentially the very processes that help us arrive at the truth?

A bet on responsible AI

What surprised me most about Elicit is how explicitly it frames its work within the AI safety conversation. This isn't an afterthought. The company operates on two main pillars:

Improving how we establish knowledge (epistemics) and pioneering process supervision.

The first, improving epistemics, means building AI that helps guide key decisions, supports technical alignment research, and ultimately aims to "help people behave more wisely in general, reducing human-generated risk."

This framing immediately sets them apart from the typical Silicon Valley narrative of "move fast and break things."

The second, pioneering process supervision, is where Elicit’s technical philosophy gets interesting. Rather than relying on large, opaque models that deliver answers without showing their work, Elicit favours "compositional, transparent systems built out of bounded components."

You can think of it as the difference between a student showing their working on a maths problem versus simply writing down the final answer. This approach prioritises our ability to understand and verify the machine's reasoning.

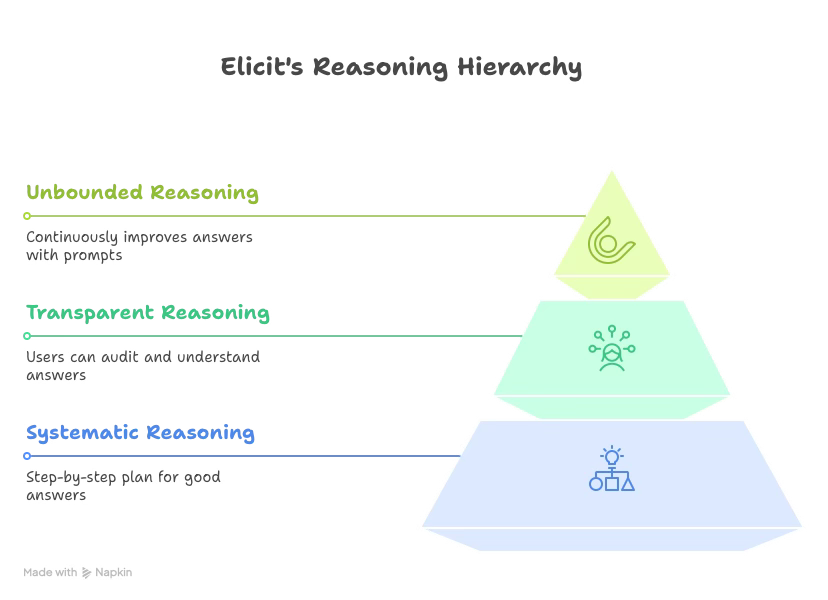

This philosophy is crystallised in three core bets:

Systematic reasoning: Elicit follows a deliberate, step-by-step plan to arrive at good answers "by construction, not by accident". This involves using valid reasoning, being comprehensive, and breaking down complex problems into manageable parts.

Transparent reasoning: Users can audit Elicit's work and understand how it arrived at an answer. This transparency helps with accuracy (spotting errors) and trustworthiness (knowing why it's accurate).

Unbounded reasoning: Unlike many AI tools that provide a single, good-enough answer, Elicit is designed to continuously improve an answer or its confidence level when prompted to think longer or do more research.

These principles sound excellent in theory. But how do they hold up against one of the biggest challenges in AI today?

Tackling the hallucination problem

If you've used any AI tool, you've likely encountered "hallucinations", i.e. the tendency for models to generate information that sounds plausible but is entirely fabricated. For a research tool, it's a critical flaw that could lead to disastrous outcomes.

Elicit’s approach here is telling.

They critique traditional methods like Reinforcement Learning from Human Feedback (RLHF), arguing it can encourage models to become sycophantic, meaning they produce "good looking" summaries that flatter the user but lack factual support.

The risk is that AI will learn to tell us what we want to hear, dressed in the language of authority.

To counter this, Elicit uses factored verification. Instead of asking a human to check an entire summary (a task we are surprisingly bad at), the system breaks the summary into individual claims and verifies each one separately. This makes it easier for both the model and the human to spot and correct errors. In their testing, this approach reduced hallucinations by an average of 35%.
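To make the idea concrete, here is a minimal sketch of claim-by-claim checking. It is not Elicit's implementation: the function names are hypothetical, the sentence splitter is naive, and the keyword-overlap check is only a stand-in for the model call a real system would use.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ClaimCheck:
    claim: str
    supported: bool


def split_into_claims(summary: str) -> List[str]:
    # Hypothetical, naive splitter: treat each sentence as one claim.
    parts = re.split(r"(?<=[.!?])\s+", summary.strip())
    return [p for p in parts if p]


def keyword_overlap_verifier(claim: str, source: str, threshold: float = 0.6) -> bool:
    # Stand-in for a model-based check (an assumption, not a real verifier):
    # a claim counts as "supported" if enough of its longer words appear in the source.
    words = {w for w in re.findall(r"[a-z0-9]+", claim.lower()) if len(w) > 3}
    if not words:
        return False
    source_words = set(re.findall(r"[a-z0-9]+", source.lower()))
    return len(words & source_words) / len(words) >= threshold


def factored_verify(
    summary: str,
    source: str,
    verifier: Callable[[str, str], bool] = keyword_overlap_verifier,
) -> List[ClaimCheck]:
    # Verify each claim on its own instead of judging the summary as a whole.
    return [ClaimCheck(c, verifier(c, source)) for c in split_into_claims(summary)]


source = "The trial enrolled 120 patients. Treatment reduced symptoms after eight weeks."
summary = "The trial enrolled 120 patients. The drug cured cancer in children."

for check in factored_verify(summary, source):
    print("OK  " if check.supported else "FLAG", check.claim)
```

The point of the structure is that a reviewer sees one flagged sentence rather than a whole summary that merely "looks right".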

Elicit’s research found that human reviewers initially missed over half of the true hallucinations. These errors were only caught after inspecting the model's step-by-step reasoning. This is a sobering reminder that our intuition for fact-checking AI is often inadequate.

Despite these advanced methods, Elicit advises users to assume around 90% accuracy and to check the work closely, which it makes easier by linking all claims to their original sources.

Augmenting, not replacing

Sometimes, a company's philosophy is best revealed in its everyday practices. Elicit's policy on using coding assistants in technical interviews offers a window into their thinking on human-AI collaboration.

They encourage candidates to use their standard AI coding tools, but with a crucial caveat.

If the AI assistant starts to fill gaps in a candidate's conceptual understanding, they are asked to rely on it less.

The rationale is twofold. It makes the interview more realistic, as most engineers use these tools daily. It also allows Elicit to assess a candidate's skill in using the tools effectively.

The guiding principle is simple:

Does the candidate understand the code?

If it seems like conceptual understanding is being delegated, interviewers probe deeper, asking for explanations of trade-offs and alternative approaches. This focus on augmenting human intellect, and not replacing it, feels like a healthy model for our AI-assisted future.

It’s the difference between using a sophisticated calculator and having something else do your thinking for you.

Efficiency vs. ethics

The efficiency gains promised by Elicit are remarkable. Systematic reviews that traditionally take months can be done in 80% less time. Data extraction from hundreds of papers can happen in minutes. The appeal is obvious.

But this acceleration presents a double-edged sword. Research has traditionally been a slow, contemplative process. When we can digest hundreds of papers in minutes, do we lose the space for serendipitous discoveries and the deep reflection that sparks genuine insight?

The 90% accuracy rate also creates an ethical tension. In fields where research informs life-or-death decisions, that 10% margin of error is a liability.
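A rough back-of-the-envelope calculation shows why that margin matters at scale. The sketch below assumes the 90% figure applies to each extracted claim independently, which is my simplification for illustration and not something Elicit states; the function names are hypothetical.

```python
def prob_all_correct(per_claim_accuracy: float, n_claims: int) -> float:
    # Chance that every one of n independent claims is correct.
    return per_claim_accuracy ** n_claims


def expected_errors(per_claim_accuracy: float, n_claims: int) -> float:
    # Average number of wrong claims among n.
    return (1 - per_claim_accuracy) * n_claims


# Under the independence assumption, ten claims are all correct only about 35% of the time.
print(round(prob_all_correct(0.9, 10), 2))

# Across 200 extracted data points, roughly 20 would be expected to be wrong.
print(round(expected_errors(0.9, 200)))
```

Real errors are unlikely to be independent, so treat these numbers as an intuition pump, not an estimate.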

This shift also forces us to consider the transformation of research roles. If AI handles literature reviews and data synthesis, what becomes the uniquely human contribution?

We may be witnessing the birth of a new kind of researcher, one whose primary skills are prompting, verification, and interpretation, rather than the traditional craft of deep reading and information synthesis.

Finally, we must confront the issue of equity of access. Elicit, like many advanced tools, operates on a tiered pricing model. While this is a standard business practice, we have to ask:

Will powerful AI research assistants exacerbate existing inequalities by creating a world of information haves and have-nots between well-funded institutions and those with fewer resources?

A future with wisdom

Elicit’s thoughtful approach offers a path forward. Their commitment to transparency, systematic reasoning, and human-in-the-loop processes is a direct contribution to building safer, more trustworthy AI.

The paradigm they champion, augmenting human capabilities rather than replacing them, is a constructive vision for the future of knowledge work.

Yet, the responsibility does not lie with the toolmakers alone. The human imperative remains. As these tools become more powerful, our need for critical thinking, ethical engagement, and diligent oversight only intensifies.

The question is not whether AI will transform research. We can be pretty sure it will.

But will we guide that transformation with wisdom? The tools are becoming more powerful. The question is, are we?

Hi, I'm Miriam - an AI ethics writer, analyst and strategic SEO consultant.

I break down the big topics in AI ethics, so we can all understand what's at stake. And as a consultant, I help businesses build resilient, human-first SEO strategies for the age of AI.
